About the author: I'm Charles Sieg, a cloud architect and platform engineer who builds apps, services, and infrastructure for Fortune 1000 clients through Vantalect. If your organization is rethinking its software strategy in the age of AI-assisted engineering, let's talk.

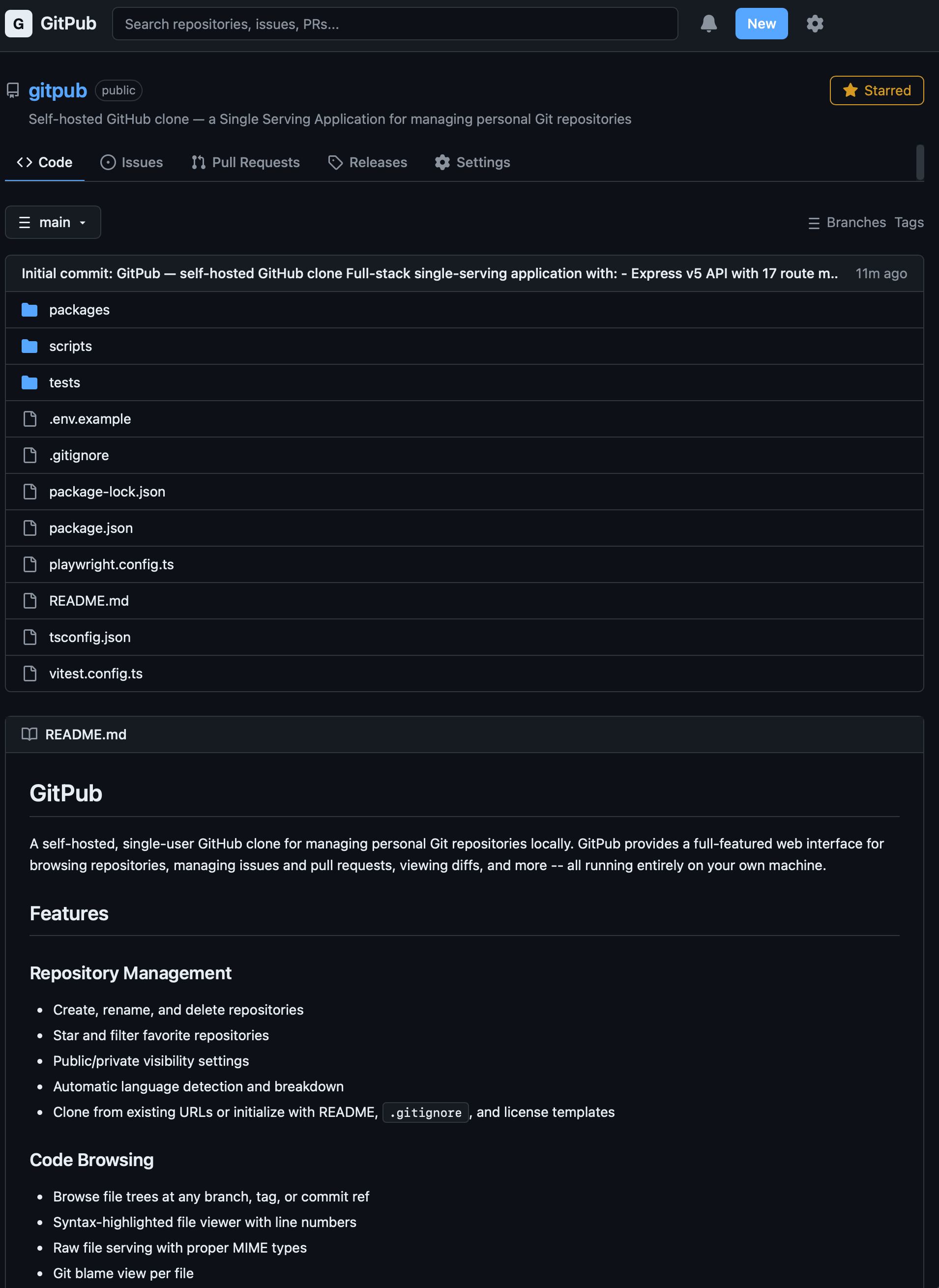

I cloned GitHub. The result is a full-featured, single-user Git hosting platform with repository management, code browsing with syntax highlighting, pull requests with three merge strategies, issues with labels and comments, releases, search, activity feeds, insights, dark mode, and 50+ API endpoints. 111 files. 18,343 lines of code. 155 passing tests. The whole thing took 49 minutes, entirely within the scope of a Claude subscription.

A human team would need eight weeks and $127,000.

The Biggest Clone Yet

The app is called GitPub. It is a Single Serving Application (SSA): a purpose-built, single-user web application that replaces a SaaS product. My previous clones replaced Harvest (~4,700 LOC), Trello (~6,800 LOC), and Confluence (~2,100 LOC). At 18,343 lines, GitPub is larger than all three combined (~13,600 LOC) and nearly three times the size of the largest.

The screenshot above shows GitPub hosting its own source code. A GitHub clone displaying the repo of a GitHub clone, rendered in a UI that was itself generated by AI. Turtles all the way down.

A Different Process, By Choice

My earlier clones each followed the same pattern: write a requirements document, hand it to Claude, walk away for 20 minutes, come back to a working app. For GitPub, I used four prompts instead of one. Claude could almost certainly have handled the entire thing from a single, sufficiently detailed prompt. I simply preferred to break it up conversationally: first the requirements, then a port tweak, then the technical design, then "build everything."

The end result was the same. The four-prompt split says more about my Thursday afternoon energy level than about any limitation of the model.

That said, the feature surface here is an order of magnitude larger than a Kanban board or a time tracker. The requirements document alone is 801 lines. The technical design is 2,135 lines. The scope covers repository management, code browsing, commits, branches, tags, pull requests with inline comments and merge strategies, issues, labels, releases, search across code and metadata, activity feeds, notifications, bookmarks, and settings. This is a serious application.

The Transcript

I kept a full transcript of every prompt I sent and every action Claude took. Here's the entire human side of the conversation:

Prompt 1: I described the goal (a single-user GitHub clone called GitPub) and asked Claude to create a comprehensive requirements document analyzing every feature GitHub has.

Prompt 2: I asked it to modify the design for local-first operation on ports 3060 and 3061.

Prompt 3: I asked for a technical design document based on the requirements.

Prompt 4: I asked it to create a test plan, create a build plan, then build the entire application, run all tests until they pass, write a README, and give me the URL.

That was it. Four prompts. Everything else was Claude working autonomously across four sessions, spawning eleven parallel agents, fixing its own integration bugs, and iterating until all 155 tests passed and the dev server was running.

The transcript is interesting because it exposes the shape of AI-assisted development at scale. Claude wrote the application in parallel, across multiple agents. In Session 2, it launched five agents simultaneously: one for the Git operations layer (1,545 lines), one for the SQLite database adapter (1,455 lines), one for core route handlers (8 files), one for feature route handlers (9 files), and one for all 31 frontend page components. When those finished, it spawned three more agents to fix the integration seams: Express v5 type mismatches, database method signature errors, TypeScript issues between layers. In Session 3, it created infrastructure files, fixed more issues, then launched three agents simultaneously for unit tests, E2E tests, and documentation.

This is the AI equivalent of a tech lead coordinating a six-person team, where every member shares perfect context, incurs zero communication overhead, and finishes in minutes instead of weeks.

Planning Before Building

The 49-minute build time includes a step the previous clones omitted: all documentation was produced before a single line of application code was written. The requirements document, technical design, test plan, and build plan were all generated as part of this session. In the earlier clones, I wrote (or generated) the requirements separately and excluded that time from the total. Here, the clock includes everything from first prompt to running application.

For the first time in this series, I had Claude create two additional artifacts before writing any code: a test plan and a build plan.

The test plan itemizes every test that should be written: unit tests for validation schemas, the SQLite adapter (71 test cases across 10 resource types), the Git operations layer, all route handlers via supertest, frontend components, and Playwright E2E tests covering navigation, repository management, code browsing, issues, pull requests, search, and settings. It specifies coverage targets: 90% for shared code, 80% for the database layer, 70% for routes, 60% overall.
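Targets like these are easy to check mechanically. Here is a minimal sketch, assuming a coverage report already reduced to one percentage per area. The area names and the `coverageShortfalls` helper are hypothetical and do not come from GitPub's test plan; only the four percentages are from the text above.

```typescript
// Hypothetical area names; the percentages are the targets quoted in the test plan.
type Area = "shared" | "database" | "routes" | "overall";

const COVERAGE_TARGETS: Record<Area, number> = {
  shared: 90,
  database: 80,
  routes: 70,
  overall: 60,
};

// Returns the areas whose measured coverage falls below the target.
function coverageShortfalls(measured: Record<Area, number>): Area[] {
  return (Object.keys(COVERAGE_TARGETS) as Area[]).filter(
    (area) => measured[area] < COVERAGE_TARGETS[area],
  );
}

// Made-up measurements for illustration (not GitPub's real numbers).
const shortfalls = coverageShortfalls({
  shared: 93,
  database: 78,
  routes: 71,
  overall: 64,
});
console.log(shortfalls); // only "database" misses its target
```

A check like this could run at the end of a test job and fail the build whenever the returned list is non-empty.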

The build plan breaks the project into ten phases, from project foundation through polish. Each phase lists the specific files to create and the dependencies between phases.
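A plan with explicit dependencies between phases can be turned into an execution order with a topological sort. The sketch below uses a simple layered variant. The phase names and dependencies are invented for illustration (the real plan has ten phases, not listed here), and `buildOrder` is a hypothetical helper, not something Claude generated.

```typescript
// Phase names and dependencies are illustrative, not GitPub's actual build plan.
interface Phase {
  name: string;
  dependsOn: string[];
}

// Layered topological sort: each round emits every phase whose
// dependencies have all been emitted in earlier rounds.
function buildOrder(phases: Phase[]): string[] {
  const done: string[] = [];
  let pending = phases.slice();

  while (pending.length > 0) {
    const ready = pending.filter((p) =>
      p.dependsOn.every((dep) => done.indexOf(dep) !== -1),
    );
    if (ready.length === 0) {
      throw new Error("Build plan has a cycle or an unknown dependency");
    }
    for (const p of ready) done.push(p.name);
    pending = pending.filter((p) => ready.indexOf(p) === -1);
  }
  return done;
}

const order = buildOrder([
  { name: "polish", dependsOn: ["frontend", "routes"] },
  { name: "routes", dependsOn: ["database", "git-layer"] },
  { name: "foundation", dependsOn: [] },
  { name: "database", dependsOn: ["foundation"] },
  { name: "git-layer", dependsOn: ["foundation"] },
  { name: "frontend", dependsOn: ["routes"] },
]);
console.log(order.join(" -> "));
// foundation -> database -> git-layer -> routes -> frontend -> polish
```

Phases that become ready in the same round (here `database` and `git-layer`) have no dependency on each other, which is the property that makes handing them to parallel agents safe.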

These documents matter for two reasons. First, they force the AI to think through the full scope before generating code. The build plan becomes a contract with itself: a checklist it can execute against rather than making ad hoc decisions mid-stream. Second, they make the process auditable. When Claude spawns eleven agents and produces 18,000 lines of code, the build plan and test plan let you verify that it actually built what it said it would build.

The test plan also addresses one of the gaps I noted in my essay on ephemeral apps: test coverage. My Harvest clone had 32 tests. GitPub has 155. That is a significant improvement, and the test plan documents what should be tested even if the current generation only implements a subset.

The $127,000 Question

After GitPub was complete, I asked Claude for a new artifact: an estimate of what it would cost to reproduce the same output with a human engineering team. The full analysis is worth reading, but here are the numbers:

| Metric | Claude | Human Team | Ratio |
|---|---|---|---|
| Calendar time | 49 minutes | ~8 weeks | ~470x faster |
| Total labor-hours | 49 minutes | ~1,240 hours | ~1,500x |
| Cost | Claude subscription | ~$127,200 | n/a |
| Lines of code | 18,343 | 18,343 | Same |
| Tests written | 155 | 155 | Same |
| Files created | 111 | 111 | Same |

The analysis breaks down three staffing options: a parallelized six-person team ($127,200 over 8 weeks), a small team of two senior engineers plus QA ($109,600 over 10 weeks), or a solo senior engineer ($79,200 over 18 weeks). The cheapest option, a single engineer working for roughly four months, still costs $79,200. The Claude work was done entirely within the scope of a monthly subscription.
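The labor-hours ratio and the hourly rates implied by these estimates can be reproduced with a few lines of arithmetic. This is a back-of-the-envelope sketch using only the figures quoted above. The 40-hour work week behind the solo-engineer rate is my assumption and is not stated in the analysis.

```typescript
// Figures quoted in the cost analysis above.
const claudeMinutes = 49;
const teamLaborHours = 1240;
const teamCostUsd = 127200;
const soloCostUsd = 79200;
const soloWeeks = 18;

// Ratio of human labor-hours to Claude's wall-clock time.
const laborRatio = teamLaborHours / (claudeMinutes / 60);

// Blended hourly rate implied by the six-person estimate.
const blendedRateUsd = teamCostUsd / teamLaborHours;

// Assumption (not stated in the analysis): a 40-hour work week.
const soloHours = soloWeeks * 40;
const soloRateUsd = soloCostUsd / soloHours;

console.log(Math.round(laborRatio));    // 1518
console.log(blendedRateUsd.toFixed(2)); // "102.58"
console.log(soloRateUsd.toFixed(2));    // "110.00"
```

The first number matches the table's rounded ~1,500x labor-hours figure.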

The analysis is honest about the caveats. A human team might use an ORM instead of hand-written SQL, reducing database layer effort by 40%. A component library like shadcn/ui would accelerate frontend development by 30%. The estimates assume reproduction of this exact implementation; humans would make different architectural choices.

The analysis is equally honest about the risks that cut the other way. The isomorphic-git library is poorly documented for bare repository operations. Express v5's breaking changes from v4 are similarly under-documented as of early 2026. Integration bugs between layers are inevitable at this scale. A team unfamiliar with these specific technologies could easily spend 2-3x the estimated time on the Git operations layer alone.

The real insight is the category of the comparison. The difference spans three orders of magnitude in time and effort. That gap changes the nature of the decision entirely. At $127,000 and eight weeks, cloning GitHub is a serious business investment requiring budget approval, staffing plans, and quarterly milestones. Within a Claude subscription and 49 minutes, it becomes something you do on a Wednesday afternoon.

What It Actually Does

GitPub is a monorepo with three packages (shared types, a Node.js/Express backend, and a React frontend) backed by SQLite and isomorphic-git for pure-JavaScript Git operations with no native Git dependency.

The feature list:

- Repository management: create, rename, delete, star repositories with automatic language detection

- Code browsing: file tree navigation, syntax highlighting via Shiki, blame view, branch switching

- Commits: paginated history, full commit detail with diffs, compare view between refs

- Branches and tags: list with ahead/behind indicators, create, delete, rename, default branch management

- Issues: create, close, reopen, comment, label assignment, shared number sequence with pull requests

- Pull requests: full lifecycle with three merge strategies (merge commit, squash, rebase), inline comments, conflict detection, files changed with diff rendering

- Releases: create with tag, release notes, and asset uploads

- Search: global search across code, commits, issues, and pull requests with qualifier support (`repo:`, `language:`, `path:`, `is:`, `label:`)

- Activity and insights: dashboard feed, per-repo activity, language breakdown, contributor statistics

- Notifications and bookmarks: personal notification feed and bookmarked items

- Settings: light/dark/system themes, default merge strategy, branch naming conventions, timezone

- 50+ REST API endpoints following GitHub's conventions
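To make the search qualifiers concrete, here is a minimal sketch of how a query string might be split into free-text terms and qualifiers. The five qualifier names come from the list above. The parsing rules, the `parseQuery` function, and the `ParsedQuery` shape are assumptions for illustration, not GitPub's implementation.

```typescript
// Qualifier names come from the feature list; the parsing rules are assumed.
const QUALIFIERS = ["repo", "language", "path", "is", "label"];

interface ParsedQuery {
  terms: string[]; // free-text search terms
  qualifiers: Record<string, string[]>; // e.g. { label: ["bug"] }
}

function parseQuery(input: string): ParsedQuery {
  const parsed: ParsedQuery = { terms: [], qualifiers: {} };
  for (const token of input.split(/\s+/)) {
    if (token === "") continue;
    const colon = token.indexOf(":");
    const key = colon > 0 ? token.slice(0, colon) : "";
    const value = colon > 0 ? token.slice(colon + 1) : "";
    if (QUALIFIERS.indexOf(key) !== -1 && value !== "") {
      const values = parsed.qualifiers[key] || [];
      values.push(value);
      parsed.qualifiers[key] = values;
    } else {
      // Unknown or malformed qualifiers are treated as plain text.
      parsed.terms.push(token);
    }
  }
  return parsed;
}

const q = parseQuery("merge conflict repo:gitpub is:open label:bug label:ui");
console.log(q.terms.join(" "));            // merge conflict
console.log(JSON.stringify(q.qualifiers)); // {"repo":["gitpub"],"is":["open"],"label":["bug","ui"]}
```

Collecting repeated qualifiers into arrays leaves it to the search layer to decide whether two `label:` values mean AND or OR.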

The Git operations layer deserves a special mention. At 1,545 lines, it implements 24 methods for bare repository manipulation using isomorphic-git: branch and tag CRUD, tree and blob reading, commit log with pagination, a diff engine with an LCS algorithm, merge with three strategies, conflict detection, language breakdown, and archive generation. The cost analysis specifically flags it as the component most likely to blow a human team's schedule.
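The text above says the diff engine uses an LCS (longest common subsequence) algorithm. As an illustration of that technique only, here is a textbook line-level diff: build the LCS table, then walk it to emit unchanged, removed, and added lines. This is not GitPub's code; `diffLines` and `DiffOp` are hypothetical names.

```typescript
type DiffOp = { kind: "same" | "add" | "remove"; line: string };

function diffLines(before: string[], after: string[]): DiffOp[] {
  const n = before.length;
  const m = after.length;

  // lcs[i][j] = length of the LCS of before[i..] and after[j..].
  const lcs: number[][] = [];
  for (let i = 0; i <= n; i++) {
    lcs.push([]);
    for (let j = 0; j <= m; j++) lcs[i].push(0);
  }
  for (let i = n - 1; i >= 0; i--) {
    for (let j = m - 1; j >= 0; j--) {
      lcs[i][j] =
        before[i] === after[j]
          ? lcs[i + 1][j + 1] + 1
          : Math.max(lcs[i + 1][j], lcs[i][j + 1]);
    }
  }

  // Walk the table from the top-left, emitting one op per step.
  const ops: DiffOp[] = [];
  let i = 0;
  let j = 0;
  while (i < n && j < m) {
    if (before[i] === after[j]) {
      ops.push({ kind: "same", line: before[i] });
      i++;
      j++;
    } else if (lcs[i + 1][j] >= lcs[i][j + 1]) {
      ops.push({ kind: "remove", line: before[i] });
      i++;
    } else {
      ops.push({ kind: "add", line: after[j] });
      j++;
    }
  }
  while (i < n) ops.push({ kind: "remove", line: before[i++] });
  while (j < m) ops.push({ kind: "add", line: after[j++] });
  return ops;
}

const SIGIL = { same: " ", add: "+", remove: "-" };
const example = diffLines(["a", "b", "c"], ["a", "c", "d"]);
console.log(example.map((op) => SIGIL[op.kind] + " " + op.line).join("\n"));
// Prints four lines: "  a", "- b", "  c", "+ d"
```

The table costs O(n × m) time and memory, which is fine for typical source files. Production diff tools usually use a more memory-efficient approach such as Myers' algorithm.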

Scale Comparison

| | Harvest | Trello | Confluence | GitPub |
|---|---|---|---|---|
| Lines of code | ~4,700 | ~6,800 | ~2,100 | 18,343 |
| Files | n/a | n/a | n/a | 111 |
| Tests | 32 | 52 | 24 | 155 |
| Build time | 18 min | 19 min | 16 min | 49 min |
| Prompts | 1 | 2 | 1 | 4 |
| Parallel agents | n/a | n/a | n/a | 11 |

GitPub is the first SSA that required multiple build sessions, parallel agent coordination, and explicit planning documents. It's also the first where I had the AI produce a cost analysis, which I plan to do for future clones as a standard artifact.

What This Means

The previous clones proved that AI can replace SaaS subscriptions for simple tools. GitPub extends that proof to applications of genuine complexity, the kind of project that would occupy a real engineering team for months, delivered in under an hour.

Four prompts. Eleven agents. 18,343 lines. 155 tests. 49 minutes.

The requirements document is the product. Everything else is a build artifact.
